Dynamic UI (1.0)

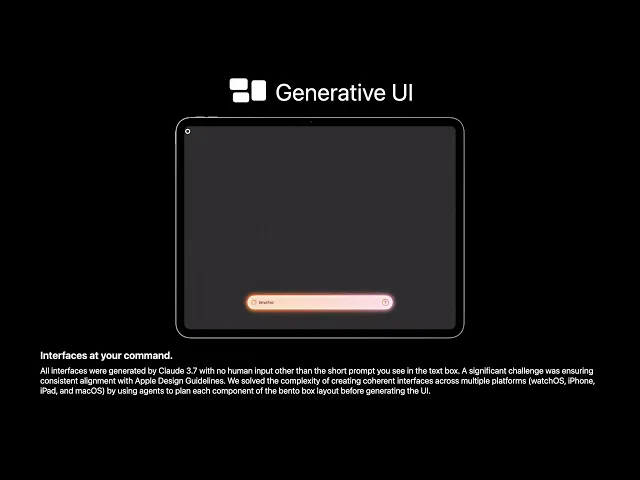

Develop an LLM-based system capable of creating iOS and visionOS apps instantly.

This enables immediate use cases in accessibility, localization, and personalized workflows. Originally intended for a distant iteration of visionOS, the project reflects the need for highly personalized software in a future with ubiquitous AR devices, where interfaces must stay comfortable and useful throughout daily routines.

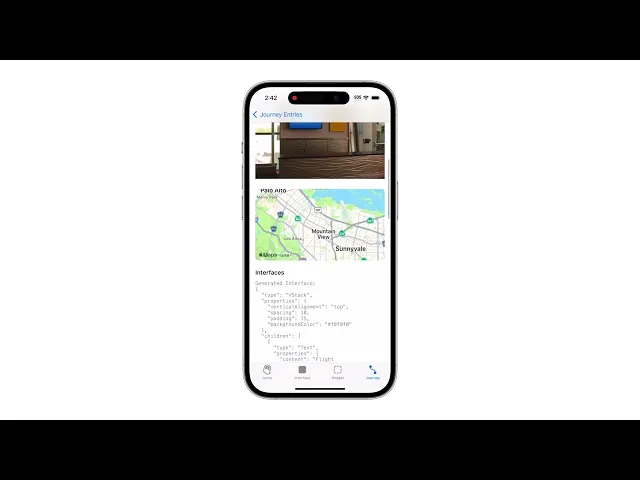

JSON → SwiftUI

2 weeks

GPT-3.5

Year: 2023

Platform: iOS + visionOS

Challenge

This project began as a nights-and-weekends experiment, not as part of my official work. I was driven by a simple but ambitious idea: what if a user could describe a UI and have it built for them instantly, live, on-device? Early attempts with GPT-3.5 quickly revealed major obstacles. The model's output was unstable: it often hallucinated interface logic or returned invalid JSON. SwiftUI requires precision, so there was no margin for error. To solve this, I developed a custom JSON schema, rigid yet expressive, tailored specifically to describing nested SwiftUI structures. This format became the contract between the language model and the recursive parser I built, shielding the frontend from GPT-3.5's volatility.
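To make the idea of a JSON contract feeding a recursive parser concrete, here is a minimal sketch. It is not the project's actual schema: the field names `type`, `text`, and `children`, the `UINode` type, and the `parseUI` and `render` helpers are all assumptions made for illustration. To stay runnable without SwiftUI, `render` produces an indented text outline where a real engine would build views.

```swift
import Foundation

// Hypothetical contract: every node names its "type"; containers may carry
// "children" and leaves may carry "text". These field names are assumptions.
struct UINode: Codable, Equatable {
    let type: String
    let text: String?
    let children: [UINode]?
}

// Decode a JSON string into the node tree. Throws if the JSON is malformed
// or a node is missing its required "type" field.
func parseUI(_ json: String) throws -> UINode {
    try JSONDecoder().decode(UINode.self, from: Data(json.utf8))
}

// Walk the tree recursively. A real renderer would return a SwiftUI view per
// node; this stand-in returns one indented outline line per node.
func render(_ node: UINode, depth: Int = 0) -> String {
    let indent = String(repeating: "  ", count: depth)
    let label = node.text.map { "\(node.type)(\"\($0)\")" } ?? node.type
    let childLines = (node.children ?? []).map { render($0, depth: depth + 1) }
    return ([indent + label] + childLines).joined(separator: "\n")
}

// Usage: a vertical stack containing a label and a button.
let sample = """
{"type":"vstack","children":[{"type":"text","text":"Hello"},{"type":"button","text":"Save"}]}
"""
let tree = try parseUI(sample)
print(render(tree))
```

Because the node type refers to itself only through an array, the same two functions handle any nesting depth, which is what lets a single fixed schema describe arbitrarily nested layouts.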

Results

The result was a functioning real-time interface engine for iOS that reliably translates user prompts into custom SwiftUI layouts. Thanks to the custom JSON contract, the system can parse even imperfect LLM output into stable, recursive SwiftUI views. I tested it across a variety of use cases: personalized video-editing toolbars, accessibility modifications such as larger tap targets, and UI localization that mirrors the layout and translates the text for right-to-left (RTL) languages such as Arabic. Despite GPT-3.5's limitations, the system remained robust, demonstrating the viability of generative UIs even before the underlying models were fully reliable.
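How the engine tolerated imperfect output is not spelled out here, so the following is only one plausible approach, sketched under assumptions: cut the JSON object out of a chatty reply, then decode leniently so that an unknown node type degrades to a harmless placeholder instead of failing the whole layout. The `Node` type, the `knownTypes` set, the `spacer` fallback, and both helper functions are hypothetical.

```swift
import Foundation

// Lenient node: unknown or missing fields degrade instead of throwing.
struct Node: Decodable {
    let type: String
    let text: String?
    let children: [Node]

    // Assumed vocabulary of the contract; anything else becomes "spacer".
    static let knownTypes: Set<String> = ["vstack", "hstack", "text", "button", "spacer"]

    enum CodingKeys: String, CodingKey { case type, text, children }

    init(from decoder: Decoder) throws {
        let c = try decoder.container(keyedBy: CodingKeys.self)
        // Normalize case ("VStack" -> "vstack") and fall back on unknown types.
        let rawType = (try? c.decode(String.self, forKey: .type))?.lowercased() ?? ""
        type = Node.knownTypes.contains(rawType) ? rawType : "spacer"
        text = try? c.decode(String.self, forKey: .text)
        children = (try? c.decode([Node].self, forKey: .children)) ?? []
    }
}

// Models often wrap JSON in prose. Keep only the span from the first "{"
// to the last "}"; return nil when no such span exists.
func extractJSONObject(from reply: String) -> String? {
    guard let start = reply.firstIndex(of: "{"),
          let end = reply.lastIndex(of: "}"),
          start < end else { return nil }
    return String(reply[start...end])
}

// Returns nil when no decodable object is found, so the caller can keep the
// previous UI on screen or retry the prompt.
func parseReply(_ reply: String) -> Node? {
    guard let json = extractJSONObject(from: reply) else { return nil }
    return try? JSONDecoder().decode(Node.self, from: Data(json.utf8))
}
```

The trade-off in this design is that a hallucinated component silently becomes empty space rather than an error, which favors keeping the interface on screen over strict validation.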

Benefits

What started as a side project ended up opening the door to a post-app future: a world where software is not pre-built but generated live around the user. The challenges (model instability, JSON unpredictability, real-time rendering) were significant, especially working solo, outside official hours. But the upside is enormous. As models improve and on-device capabilities grow, this approach could reshape interface development across platforms, particularly in AR, where personalization is critical. The core insight still holds: even imperfect LLMs can generate useful software if we give them the right guardrails.