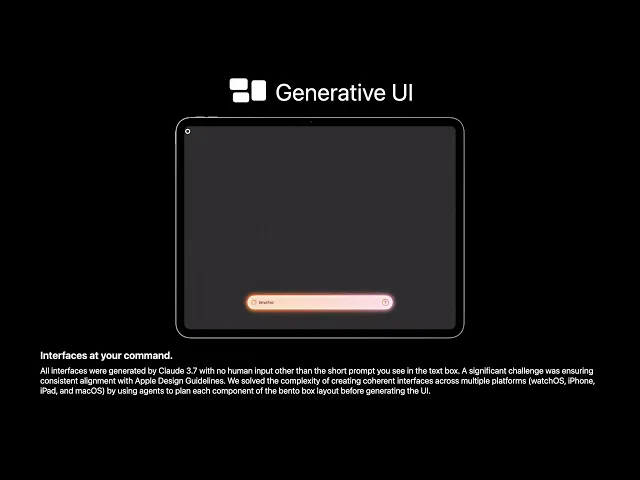

Generative Computing

"The last program ever written will be that which creates other programs"

Model used: GPT-4o + GPT-4o mini

Presented to: 300+ people (Machine Learning Summit)

Project length: 2 months

Year: Early 2024

Challenge

In late 2023, during my time at Apple, I grew increasingly curious about the emerging space of machine learning and its potential to redefine user interaction paradigms. That curiosity sparked a side project, DynamicUI, which would later evolve into what I now call Generative Computing.

The core challenge I set out to solve was:

What would a user interface look like if it could be generated dynamically, on the fly, with no compiled code, using only a prompt and a neural network?

This was uncharted territory. Traditional development requires writing and compiling code for every possible state or interaction. I wanted a system that could react to multimodal input (text, audio, images, video) in real time and generate interactive interfaces as naturally as a conversation, all while staying within platform guidelines: no private APIs, no hacks, no compilation.

This wasn’t an official Apple project. I developed it entirely on my own time, with no internal SDKs or tools—just pure curiosity and a deep desire to push boundaries.

Results

Although I worked solo and outside of official channels, the project gained considerable traction internally. The prototype was presented in internal seminars at Apple to an audience of over 300 people, and the response was overwhelmingly positive.

Here are some of the outcomes:

New Interaction Model: Introduced a compelling new paradigm of UI generation powered entirely by LLMs and JSON—no compiled code required.

Internal Recognition: Sparked deep cross-functional interest, leading to further exploration in official capacities and influencing follow-up projects I later led.

Scalable Concept: The framework respected App Store guidelines and could hypothetically be submitted and approved today, showing that forward-looking ideas can still fit within present-day systems.

AppShack Launch: Work began to bring the tool into more teams at Apple for further testing and application.

Most importantly, Generative Computing helped plant the seeds for a new way of thinking about computation—one where neural networks serve not just as assistants, but as the engines of software generation itself.

Deep Dive

Generative Computing is a new category of computation, distinct from classical and quantum computing. Instead of running code compiled ahead of time for each screen, a generative computer is driven by a neural network: inputs flow directly through the model to produce outputs (in this case, user interfaces). There is no compilation step, and no Swift or Objective-C is written per interface. The model emits a platform-agnostic JSON format that defines UI elements and modifiers, and the device parses that JSON into views in real time.
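To make the JSON-to-view step concrete, here is a minimal sketch of how a model's output could be parsed into a view tree. The schema (`type`, `props`, `modifiers`, `children`), the element names, and the `describe` helper are all assumptions made for illustration; the prototype's real format is not shown here, and its renderer was SwiftUI rather than Python.

```python
import json
from dataclasses import dataclass, field

# Hypothetical element vocabulary; the prototype's actual schema is not published.
ALLOWED_TYPES = {"vstack", "text", "toggle", "button"}


@dataclass
class Node:
    type: str
    props: dict = field(default_factory=dict)
    modifiers: list = field(default_factory=list)
    children: list = field(default_factory=list)


def parse_node(raw: dict) -> Node:
    """Recursively turn a decoded JSON object into a Node, rejecting unknown types."""
    node_type = raw.get("type")
    if node_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported element type: {node_type!r}")
    return Node(
        type=node_type,
        props=raw.get("props", {}),
        modifiers=raw.get("modifiers", []),
        children=[parse_node(child) for child in raw.get("children", [])],
    )


def describe(node: Node, depth: int = 0) -> list:
    """Flatten the tree into indented lines; a stand-in for real view rendering."""
    label = node.props.get("label", "")
    lines = ["  " * depth + node.type + (f" '{label}'" if label else "")]
    for child in node.children:
        lines.extend(describe(child, depth + 1))
    return lines


# Example of what a model response might look like under the assumed schema.
MODEL_OUTPUT = """
{
  "type": "vstack",
  "modifiers": [{"name": "padding", "value": 16}],
  "children": [
    {"type": "text", "props": {"label": "Living Room"}},
    {"type": "toggle", "props": {"label": "Lamp"}}
  ]
}
"""

tree = parse_node(json.loads(MODEL_OUTPUT))
print("\n".join(describe(tree)))
```

Restricting the parser to a fixed vocabulary is one way to keep the renderer itself static: the app ships a known set of views, and only the JSON changes at runtime.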

The prototype uses:

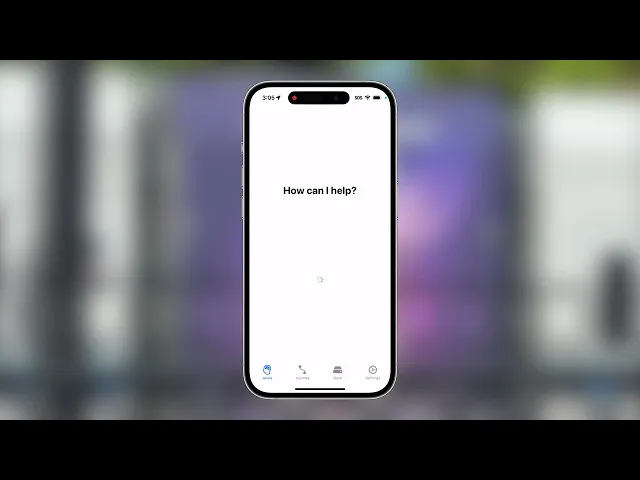

SwiftUI on iOS as the front-end rendering engine.

OpenAI’s LLM API to generate interface descriptions and react to user input.

Multimodal Input Handling: Users can interact through voice, images, text, and even video.

Tool Integration: Functional elements are added dynamically (e.g., tapping a UI switch triggers a HomeKit command).
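The tool integration described in the list above can be sketched as a small registry that routes UI events to named handlers. Everything here is hypothetical: the `home.set_power` tool name, the event shape, and the in-memory `DEVICE_STATE` dictionary, which stands in for a real smart-home call such as HomeKit.

```python
from typing import Callable, Dict

# Registry of tools a generated interface may call. All names are hypothetical.
TOOLS: Dict[str, Callable[[dict], str]] = {}


def tool(name: str):
    """Decorator that registers a handler under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


# In-memory stand-in for device state; a real app would call HomeKit instead.
DEVICE_STATE = {"lamp": False}


@tool("home.set_power")
def set_power(args: dict) -> str:
    device = args["device"]
    if device not in DEVICE_STATE:
        raise KeyError(f"unknown device: {device}")
    DEVICE_STATE[device] = bool(args["on"])
    return f"{device} is now {'on' if DEVICE_STATE[device] else 'off'}"


def handle_event(event: dict) -> str:
    """Route a UI event (for example a toggle tap) to the tool named in its action."""
    action = event["action"]
    handler = TOOLS.get(action["tool"])
    if handler is None:
        raise ValueError(f"no tool registered for {action['tool']!r}")
    # Combine the static arguments from the JSON with the value from the interaction.
    args = {**action.get("args", {}), "on": event["value"]}
    return handler(args)


# A toggle switched on by the user, carrying the action declared in the generated JSON.
tap = {"action": {"tool": "home.set_power", "args": {"device": "lamp"}}, "value": True}
print(handle_event(tap))
```

Because only registered tool names are dispatched, the model can request an action but cannot introduce new executable behavior on its own.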

The project also explored several research topics:

UI Atomization: Breaking complex UIs into semantically meaningful, generatable components.

HIG Adherence: Ensuring that generated interfaces stay consistent with Apple’s Human Interface Guidelines.

Consistency in Generation: Maintaining visual and behavioral consistency across multiple prompts and interactions.
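One way to approach guideline adherence is to lint the generated description before it is rendered. The sketch below is an assumption about how such a check could look, not the method used in the prototype: the rule set, the `props` keys, and the `lint` function are invented for illustration, and the 44 pt threshold reflects the minimum hit-target size recommended in Apple's Human Interface Guidelines.

```python
MIN_TARGET_PT = 44  # minimum hit-target size recommended by the HIG, in points
INTERACTIVE = {"button", "toggle"}  # hypothetical set of tappable element types


def lint(node: dict, path: str = "root") -> list:
    """Collect guideline problems for a generated UI tree (illustrative rules only)."""
    problems = []
    props = node.get("props", {})
    if node.get("type") in INTERACTIVE:
        if not props.get("label"):
            problems.append(f"{path}: interactive element has no label")
        for side in ("width", "height"):
            size = props.get(side)
            if size is not None and size < MIN_TARGET_PT:
                problems.append(f"{path}: {side} {size} pt is below {MIN_TARGET_PT} pt")
    for index, child in enumerate(node.get("children", [])):
        problems.extend(lint(child, f"{path}.children[{index}]"))
    return problems


# A generated tree with one compliant control and one that breaks both rules.
generated = {
    "type": "vstack",
    "children": [
        {"type": "button", "props": {"label": "Save", "height": 48}},
        {"type": "toggle", "props": {"height": 30}},
    ],
}

for problem in lint(generated):
    print(problem)
```

The list of problems could be fed back to the model as a correction prompt, or used to reject a response and regenerate it.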

Why it matters

Generative Computing allows for experiences that adapt to the user’s needs in real time. Need to label laundry and pair with smart devices? Just describe what you want. Looking for nearby dinner spots? The interface generates itself, complete with maps, filters, and interactive controls. No pre-coded views, no traditional dev cycles—just intent-to-interface.

Think of it as the “right-brain” of computing—creative, adaptive, contextual—working in harmony with the more rigid, logical “left-brain” of classical systems.

This project remains one of the most meaningful I’ve built. It challenged what I thought was possible, gave rise to new research directions, and ultimately helped shape a broader vision for how humans and machines might collaborate to create experiences—on the fly, on device, and on demand.